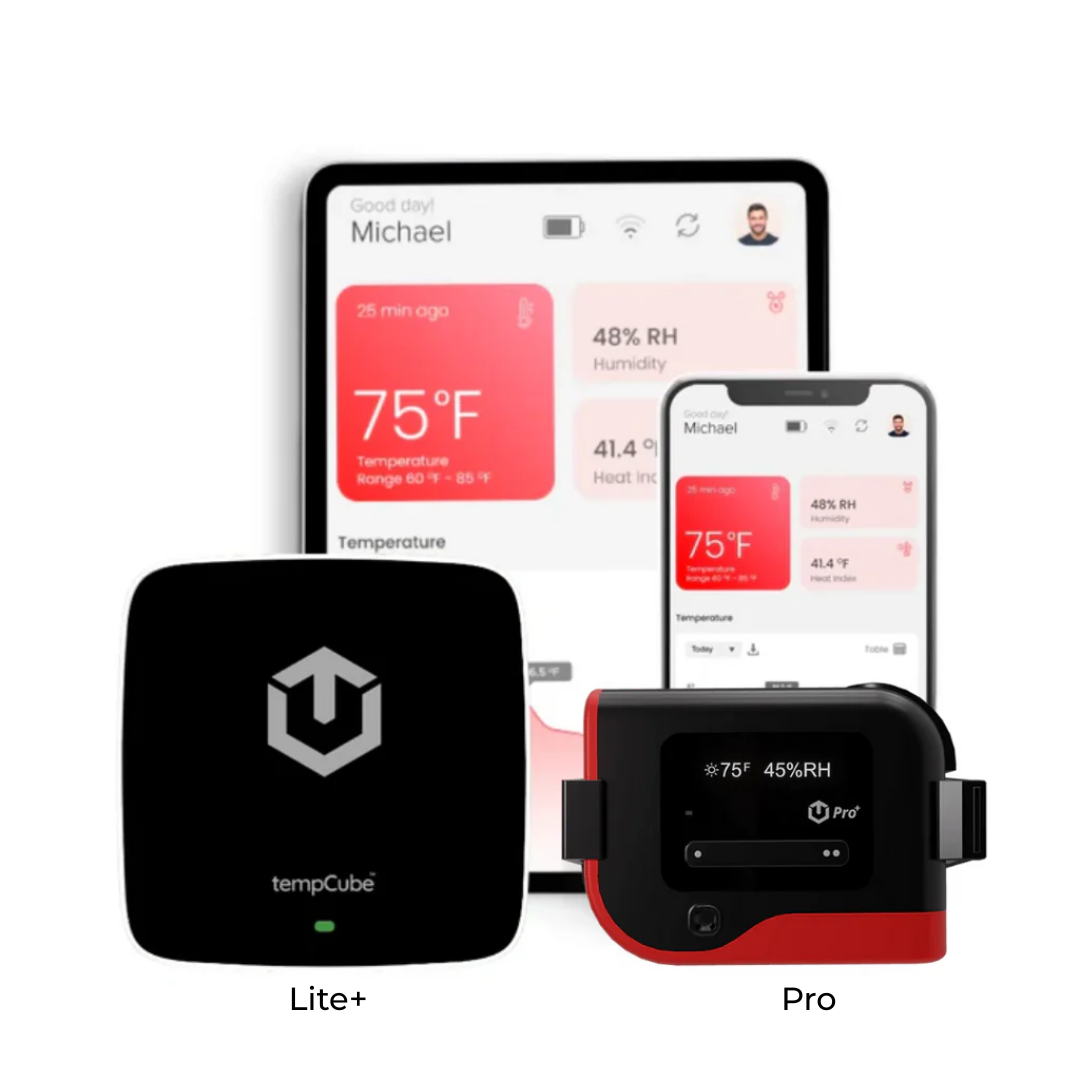

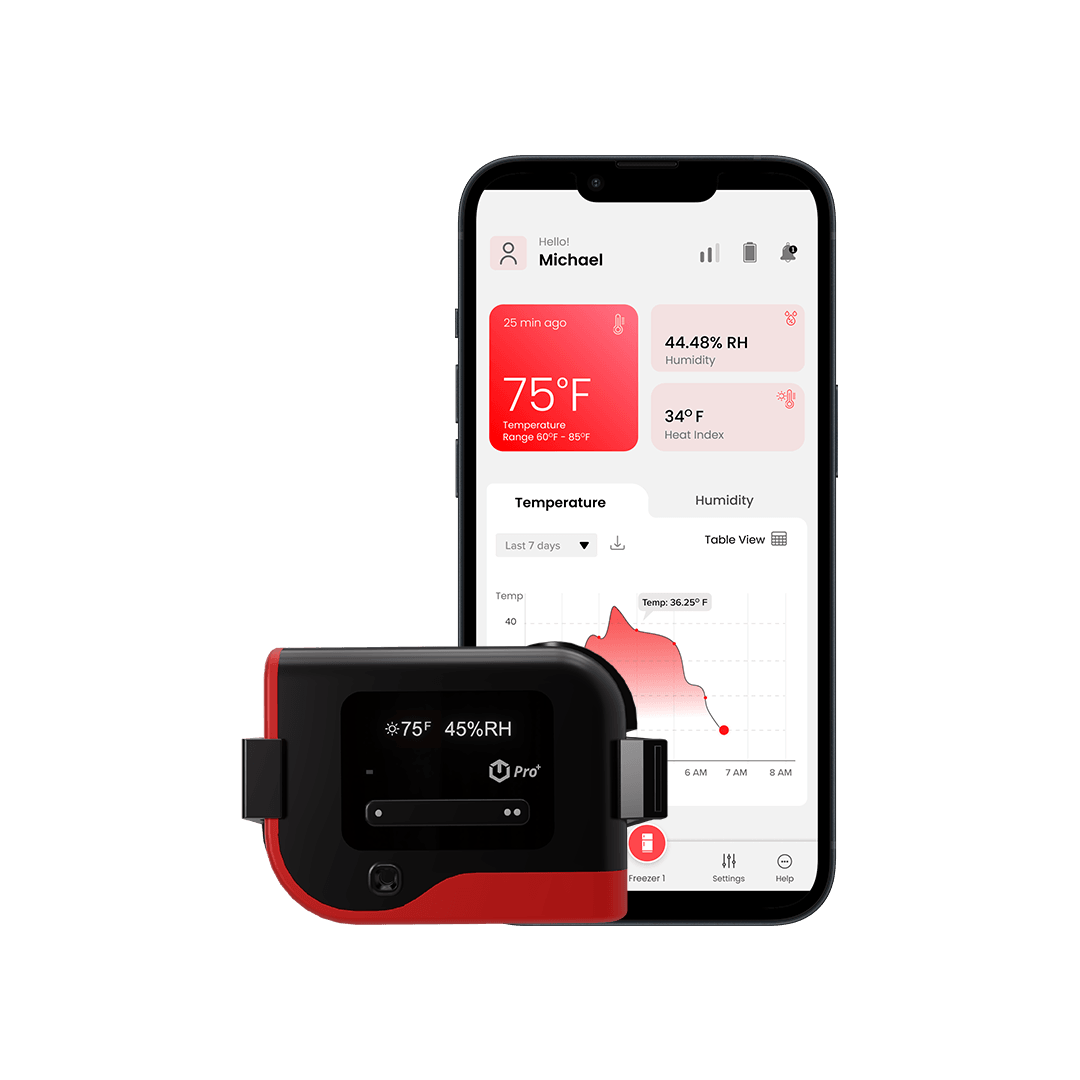

As server rooms increase in size and complexity to support digital growth, reactive temperature monitoring is no longer sufficient. Big data aggregation and analytics solutions like Tempcube provide a proactive approach to thermal regulation through trend identification, predictive modeling and risk avoidance. By leveraging data from Internet of Things sensors and monitoring platforms, server room managers gain comprehensive visibility across environments to optimize stability and resource efficiency.

Data-Driven Insights for Precision Management

With hundreds of data points recorded each second across server room environments, temperature data is generated and streamed continuously for analysis. Monitoring solutions like Tempcube aggregate this data in a centralized platform to enable:

•Long-term trend analysis to see patterns over weekly, monthly and yearly periods. This makes it possible to anticipate seasonal changes, equipment performance issues and potential thermal events in advance.

•Machine learning algorithms that detect anomalies and provide predictive risk analysis for fluctuations before temperature thresholds are breached. This proactive modeling allows for adjustments to avoid instability.

•Custom event correlation using data from other systems like security, access control, networking and applications. By combining datasets, root causes of temperature changes can be identified faster for remediation.

•Comparative analysis between server room environments to optimize cooling resource allocation and ensure consistent regulation levels based on IT infrastructure demands. This reduces energy consumption and costs.

•Automated reporting that delivers both high-level and granular insights into current and historical server room temperature performance. Customized reports can be generated on-demand for specific dates, time ranges, locations and events.
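
The event correlation idea above can be sketched in a few lines. This is only an illustration, not Tempcube's implementation: the function name `events_near_spikes`, the data shapes and the sample events are all assumptions made for the example. It looks up which events from another system (here, hypothetical access-control and maintenance logs) occurred shortly before each reading that crossed a temperature threshold.

```python
from bisect import bisect_left, bisect_right
from datetime import datetime, timedelta

def events_near_spikes(readings, events, threshold_c, window):
    """Map each over-threshold reading to the events logged shortly before it.

    readings: list of (datetime, temperature_c), sorted by time (assumed shape)
    events:   list of (datetime, label), sorted by time (assumed shape)
    window:   timedelta to look back from each spike
    """
    event_times = [t for t, _ in events]
    matches = {}
    for ts, temp in readings:
        if temp < threshold_c:
            continue
        # Binary search for events in the interval [ts - window, ts].
        lo = bisect_left(event_times, ts - window)
        hi = bisect_right(event_times, ts)
        matches[ts] = [label for _, label in events[lo:hi]]
    return matches

# Hypothetical sample data for illustration only.
t0 = datetime(2024, 1, 1, 12, 0)
readings = [
    (t0, 22.0),
    (t0 + timedelta(minutes=5), 22.4),
    (t0 + timedelta(minutes=10), 27.1),
]
events = [
    (t0 - timedelta(hours=2), "firmware update"),
    (t0 + timedelta(minutes=7), "door held open"),
]
result = events_near_spikes(
    readings, events, threshold_c=27.0, window=timedelta(minutes=15)
)
```

In this sample, only the door event falls inside the 15-minute look-back window of the spike, which is the kind of shortlist that speeds up root cause analysis.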

With data-powered monitoring in place, server room temperature regulation is no longer reactive but proactively managed through advanced analytics, modeling and risk avoidance. Environments stay secured for continuity, and costs stay contained.

From Reactive to Predictive: The Value of Trend Analysis

Without historical data and trending, server room temperature monitoring relies solely on threshold alerts to signal an issue, by which time damage or disruption may have already occurred. With big data aggregation, the following trends become visible:

•Gradual increases or spikes over days or weeks indicating a cooling system problem or hot spot buildup that requires maintenance before an emergency state. Early trend detection allows for servicing during standard operating hours at lower cost.

•Repeating fluctuations linked to seasonal changes, software updates or system events. Recurring trends can be paired with automated adjustments to reduce instability, downtime and equipment stress during these periods.

•Sporadic variations that point to potential failures, allowing proactive replacement of parts like fans, pumps, compressors and other cooling infrastructure before a total shutdown. Trend insights optimize maintenance scheduling and budgets.
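
To make the "gradual increase" case concrete, here is a minimal sketch that fits a straight line to recent readings and estimates how long until a threshold would be reached if the trend continued. It assumes a simple ordinary least-squares fit; the function names, the 27 °C threshold and the sample readings are illustrative assumptions, and a real platform may use quite different models.

```python
def linear_trend(hours, temps):
    """Ordinary least-squares fit; returns (slope in deg C per hour, intercept)."""
    n = len(hours)
    if n < 2:
        raise ValueError("need at least two readings")
    mean_x = sum(hours) / n
    mean_y = sum(temps) / n
    sxx = sum((x - mean_x) ** 2 for x in hours)
    if sxx == 0:
        raise ValueError("readings must span more than one timestamp")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(hours, temps))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def hours_until(threshold_c, hours, temps):
    """Hours after the last reading until the fitted line reaches threshold_c.

    Returns None when the trend is flat or falling.
    """
    slope, intercept = linear_trend(hours, temps)
    if slope <= 0:
        return None
    crossing = (threshold_c - intercept) / slope
    return max(0.0, crossing - hours[-1])

# Hypothetical daily readings: a room warming by 0.5 deg C per day.
hours = [0, 24, 48, 72, 96]
temps = [22.0, 22.5, 23.0, 23.5, 24.0]
slope, intercept = linear_trend(hours, temps)
eta = hours_until(27.0, hours, temps)
```

With these sample readings the fitted slope works out to 0.5 °C per day, so the projected crossing of 27 °C is about six days after the last reading: enough lead time to schedule servicing during standard hours rather than respond to an alarm.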

Predictive Modeling: From Trends to Risk Anticipation

With historical data analysis, trends provide visibility into patterns of change over time. Predictive modeling goes a step further, using machine learning and data mining to estimate the probability of risks before trends become emergencies. Monitoring platforms like Tempcube leverage vast datasets to enable:

•Automated anomaly detection that flags unusual temperature variations the moment they spike outside of normal ranges based on historical baselines. These alerts trigger investigation into root causes before damage results.

•Risk modeling that assesses the likelihood of certain events like extreme heat waves, HVAC failures or hot spot buildups based on current conditions. Server room managers can evaluate risks to take proactive measures in advance such as increasing cooling capacity or servicing equipment.

•Scenario simulation using data from additional systems like weather services, equipment management platforms and infrastructure monitoring. Various scenarios can be created based on external factors to determine vulnerabilities and plan interventions when risks are high during specific periods.

•Custom predictive rules and machine learning processes that tap into data unique to each organization and server room layout. Over time, algorithms become adept at pattern detection for environment-specific risks allowing managers to resolve common issues before disruption.
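
As a rough illustration of anomaly detection against a historical baseline, the sketch below flags readings that sit far from the rolling mean of the preceding readings, measured in standard deviations (a z-score). This is a simple statistical stand-in, not a machine learning model and not a description of how Tempcube works; the window size, the z-score limit and the `min_std` floor are assumed values chosen for the example.

```python
from collections import deque
from statistics import mean, stdev

def detect_anomalies(temps, window=12, z_limit=3.0, min_std=0.1):
    """Return (index, temp, z) for readings far from the rolling baseline.

    The baseline is the mean and sample standard deviation of the previous
    `window` readings, excluding the current one. `min_std` floors the
    deviation so a nearly flat baseline does not flag ordinary sensor noise.
    """
    history = deque(maxlen=window)
    flagged = []
    for i, temp in enumerate(temps):
        if len(history) == window:
            baseline = mean(history)
            spread = max(stdev(history), min_std)
            z = (temp - baseline) / spread
            if abs(z) > z_limit:
                flagged.append((i, temp, round(z, 2)))
        history.append(temp)
    return flagged

# Hypothetical readings: steady around 22 deg C with one spike at index 12.
temps = [22.0, 22.1, 21.9, 22.0, 22.2, 21.8, 22.1, 22.0,
         21.9, 22.0, 22.1, 22.0, 26.5, 22.0]
flagged = detect_anomalies(temps)
```

One design choice to note: the spike is added to the history after it is flagged, which temporarily widens the baseline. Excluding flagged readings would keep the baseline tighter, at the cost of flagging every reading after a genuine, lasting shift in temperature.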

With predictive capabilities in place, server room temperature management evolves from reactive responses to proactive risk avoidance and stability assurance. Resources and costs are optimized through targeted servicing and adjustments based on data-driven insights into probabilities for failure, performance impacts and other thermal events before effects are felt environment-wide.

From Optimization to Avoidance: The Risk Management Journey

Monitoring data drives continuous improvements in server room efficiency, temperature performance and energy usage by spotlighting both incremental and high-impact enhancements, environment by environment. The ultimate goal, however, is to combine that data with modeling and simulation to avoid risk through:

•Identification of failure points and single sources of vulnerability to be eliminated through redundancy. This avoids infrastructure or system issues cascading into downtime, data loss and instability.

•Evaluation and resolution of potential risks connected to seasonal demands, software updates, equipment age and additional variables through proactive planning. This avoids emergency responses that drain resources.

•Understanding of probabilities around catastrophic events so protective measures and disaster plans can be put into place based on scenario modeling. This avoids exposures that lead to irreparable damage and long-term impact.

•Partnership with other systems that monitor adjacent infrastructure, applications and facilities. Cross-referencing datasets provides a comprehensive view of risks and relationships between technology, security and business functions. This avoids siloed management and reactive troubleshooting.
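
The first item in the list above, finding single points of failure, lends itself to a simple check. The sketch below assumes a hypothetical inventory that maps each cooling zone to its units and their health status; the zone names, unit names and the `required` parameter are invented for illustration. It reports every zone that would drop below the required number of healthy units if any one unit failed.

```python
def single_points_of_failure(zone_cooling, required=1):
    """Return zones that cannot lose one healthy cooling unit.

    zone_cooling: {zone: {unit_name: is_healthy}} (assumed inventory shape)
    required:     healthy units a zone needs to hold temperature
    """
    at_risk = {}
    for zone, units in zone_cooling.items():
        healthy = [name for name, ok in units.items() if ok]
        # Losing any single unit leaves len(healthy) - 1 in service.
        if len(healthy) - 1 < required:
            at_risk[zone] = healthy
    return at_risk

# Hypothetical inventory for illustration only.
zone_cooling = {
    "row-a": {"crac-1": True, "crac-2": True},
    "row-b": {"crac-3": True, "crac-4": False},
    "row-c": {"crac-5": True},
}
at_risk = single_points_of_failure(zone_cooling)
```

Here `row-b` is flagged because its second unit is already unhealthy, and `row-c` because it never had a backup. Both are candidates for added redundancy before a failure turns into downtime.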

Data-driven risk avoidance is the apex of server room management. Monitoring pays back in sustained stability because events are contained long before expense or downtime accrues. Resources are preserved, and sharing insight across connected systems strengthens each one. Small gains in individual environments compound into continuity for every environment, around the clock.

Oversight at scale deters threats by distributing vigilance across every point of failure along the chain, so problems are found and diverted early. Assets stay protected and access is maintained. Temperature stays within set limits, with shifts corrected before extremes take hold.

Conclusion

Big data and analytics transform reactive server room temperature monitoring into a proactive risk management strategy. With historical trends, predictive modeling and scenario simulation, fluctuations can be anticipated and addressed before they cause disruption. Platforms like Tempcube make it possible to:

•Aggregate vast datasets from across environments for comprehensive continuous analysis. This visibility enables early detection of gradual changes, recurring events and sporadic variations.

•Identify anomalies through automated detection and risk probability analysis using machine learning algorithms. This allows managers to address unusual temperature spikes or the likelihood of failures in advance.

•Understand relationships between internal and external factors influencing server room temperature performance. This cross-system data correlation results in streamlined issue resolution and risk planning.

•Eliminate single points of failure and vulnerabilities through scenario modeling. This testing and resolution process avoids catastrophes that threaten IT infrastructure and digital operations.

•Shift strategy from optimization alone to risk avoidance where outcomes are measured first in stability sustained.

This evolution maximizes continuity and minimizes costs over the long run. With data driving each decision, the future of a server room depends less on chance and more on probabilities that are understood and kept within set limits. Resources and revenue are protected when oversight treats a stable environment as a commitment the whole enterprise relies on.

Managed this way, risk becomes a competitive advantage. Monitoring finds points of failure around the clock and protects what is at stake in data that would otherwise stream by unnoticed. Timely data ties investment to returns, because modest spending contains risks that would otherwise multiply. And because too much is at stake for oversight to stand alone, connecting data across systems keeps limits enforced and safeguards a business built to scale.